- Recording Date: 10/23/2024

- Topic: SQL Server Performance

- Hosts: Steve Stedman and Shannon Lindsay

Stedman SQL Podcast Ep. 5 – Performance

In episode five of the Stedman Solutions SQL Server podcast, Steve Stedman and Shannon Lindsay discuss common performance issues in SQL Server. They highlight the importance of indexing, emphasizing the need for proper index creation and avoiding multiple identical or single-column indexes. They address the impact of functions in the WHERE clause, excessive use of cross apply and correlated subqueries, and the necessity of regular database maintenance. Stedman also explains the significance of SQL Server isolation levels and the benefits of READ COMMITTED snapshot isolation. The episode concludes with a Q&A on SQL Server isolation levels and their impact on data.

Watch / Listen Now

Podcast Transcript – Performance

Steve Stedman 0:16 Hey everyone, welcome to this week’s Stedman solutions SQL Server podcast. This is episode number five on October 23 I’m your host, Steve Stedman, and I’m assisted today by Shannon Lindsay,

Shannon Lindsay 0:29 hello, everyone.

Steve Stedman 0:31 All of our previous episodes of the podcast are available on the Stedman Solutions website, if you want to go back or interview those at any time, or they’re available on YouTube also. So some Stedman Solutions team news before we jump into this week’s topic, and we’ve got a database health monitor update that’s coming out next week, and then two weeks from now, George and Mitchell, the developers on database health monitor, will be on the podcast to discuss some of the cool new features. If you’ve been following last time we had database health monitor update, it was a lot of things related to the quick scan report and some other checks like that. But this time, there’s a whole lot of new things that are being tracked, new charts and new historic monitoring. So some cool stuff there that they’ve been working on for about a month now, and that’ll be available hopefully in the next week. Also keep in mind that November is a couple weeks away. Wow, where did this month, or where did this year go? And November, the whole month, through Black Friday and even the end of the month, we have some crazy prices on our different SQL Server, classes such as 50% off, and even some classes for as low as $2 or $3 for a whole month’s worth of crazy deals. And all of our classes are going to be featured on someday during the month as being on sale. So if you’re thinking of want to learn something about SQL Server, you can check that out, whether it’s our new SQL Server replication course, or interview questions, or common table expressions class, or even some of our shorts, like the aggregation class that’s coming, starting on November 1, every single day of the month, we will have a different deal. So check in on the Stedman Solutions website or blog post at Stevestedman.com for what’s happening there. So one of the things I want to since we’re still new, we’re only in Episode Five, now, I just want to let people know that places you can watch or listen and watch us on our YouTube channel, and that’s if you go to YouTube and search on my name, Steve Stedman, you can find our full podcast episodes there, or you can go to Stedman solutions.com/podcast, and from there get a list of all of the previous podcasts. It takes about four or five days once we record the podcast before we get it up on the site there. So just keep in mind that if you really want to go back and watch this one, you can have to go directly to YouTube to see it right away. So now on to this week’s topic.

Shannon Lindsay 3:03 All right, this week we’re talking about performance issues. So in regards to that, what are some common performance issues that you come across?

Steve Stedman 3:16 Oh, wow, that’s a good question. I mean, we get called in for a lot of different performance things. And sometimes, yeah, sometimes they’re performance issues with a vendor supply application. Sometimes there are performance issues with in house applications, and it usually kind of falls into one of those two areas. And if we have a client where they have their own in house application, where we have their development team that’s built it. It’s usually nice, a little bit nicer because we’re able to go and share and teach with them how to do the things to make the queries run faster, and able to let them make those changes in the application when they’re needed. Other times, when we’re dealing with vendor supplied applications, when we’re dealing with vendor supplied applications, we run into like limitations, like certain vendor applications won’t let you add an index in the database. But then other times, we work with vendors where we found an application that was performing really, really poorly, we added a half dozen indexes and showed how much faster their application could work, and the client sent that back to the vendor, and the vendor ended up including those in their application going forward. So kind of the two different areas are in house versus vendor applications, but what we see between the two of those is pretty common, where we see a lack of indexes or a misunderstanding or misuse of indexes, and that can be how the indexes are created, or how they’re even with indexing hints and things like that, but that’s one of those things that we can help with quite a bit. We see a lot of functions in the where clause, and what this means is. Instead of saying like where to Column A equals something, it’s actually a function call on it, like where length or where date add, or something like that. And there’s a lot of work that needs to be done on those. We’ll cover that a little bit later. We oftentimes see excessive use of cross apply, cross apply, or even outer apply. It’s it’s a good feature, but when it’s used too extensively, or used without really understanding how many rows are being impacted, it can be really expensive on the performance front. On that same point, we see a lot of correlated sub queries, where you’ve got queries that are being run over and over and over again, or some queries are being run over and over over again inside of your queries. And then the common thing we see, too, normally, the first thing we look at is a lot of blocking and blocking. And additionally, we oftentimes see that somebody’s having performance issues with their database, and it turns out they haven’t been doing proper maintenance for some time. So that’s kind of the the general stuff that we typically see. And then there’s a lot of just kind of off. I mean, not so much mainstream type things that we see that we’ll deal with as well.

Shannon Lindsay 6:06 Yeah, you mentioned indexes. So relating to that, what are some of the issues that come across with lack of indexing and kind of misunderstanding them?

Steve Stedman 6:18 this is one of those that I don’t like to blame the person that’s doing the indexes wrong. I always like to blame Microsoft, because they didn’t really explain how the indexes should work very well to this person, or whatever it may be. So but what we run into is we run into cases where we have, oh, a number of different trends here. One of them would be multiple identical indexes, or, let’s say you have four or five indexes on the same table, on the exact same columns, and exactly identical with that. Having those multiple indexes does two things to impact your performance. One of them is that it impacts the query optimizer, because the query optimizer has to look at each one of those and say, Well, is this one going to be better than that one? But it also means that whenever you’re doing inserts, updates or deletes, that they’re all going to be a little bit slower, because each one of those multiple indexes is going to be updated, and having two or three or even five or 10 indexes on a table that are exactly the same gives you no more value over having that single index there. The other thing we run into is where somebody will learn a little bit about indexes, and they’ll put single column indexes on every column. So let’s say you’ve got a table that has 15 columns in it, and instead of creating one index that’s going to help with a lot of your queries, they’ll go and create 15 indexes, one for each of the columns, thinking, well, if I have an index on each one of those columns, it’s going to help me in all situations. But the problem you run into with that is that the query optimizer will only pick one index per table that it’s working on in order to be able to use that to bring back your data as efficiently as possible. So if you’re going to select from a table where you’re checking three or four different columns for certain values, if you had an index on all three or four of those columns, it might give you a quicker lookup than if you then if it was only able to use index on one of those columns, and SQL Server will probably pick the best column when you have those single column indexes, but it’s not going to give you the value of having multiple columns in an index. Now, with indexes, keep in mind that index is just a copy of those certain columns ordered in a certain way that will help you get to the data quicker. And if you have 15 columns and 15 separate indexes, you’ve got 15 copies of all that data, at least from each column, and only one of those is ever being used at the time. Well, maybe you’re not getting the most value out of it. The other thing we run into there is somebody will have a single index on all the columns. Again, you learn a little bit about index, and you say, oh, I’ll just create an index on 15 columns in the table, and all 15 or when you’d be indexed, what you can end up with that is that your index can actually be larger than the table itself. When you’ve got those all indexed, and it’s it might help you in a couple of queries, but very rarely would you need all 15 index or all 15 columns in that example, in the same index, and you end up with a lot of over and a lot of maintenance around that. The other thing we commonly run into around that is the DTA indexes are, these are what the Microsoft database. Tune in advisor tells you to create. And if you’re running a database, tune in advisor. Step one, I would say, is just stop. Don’t use it. Don’t listen to it, don’t believe it, because the database tuning advisor comes back with these really big indexes. And when I look at the indexes the database tuning advisor creates, it’s very similar to what we talked about with the single index and all columns, where it’s trying to put as much stuff into that index as possible. And I almost. Think that the database tuning advisor is a way to push people to create inefficient indexes to make their databases bigger. I don’t know. I’m not really a big conspiracy theorist, but it seems like that the indexes that you get out of the database tuning advisor don’t always help, and they actually hinder, in my experience, more often than helping. The other thing we see is that when developers are using the execution plan and they get a suggested index, that they’ll add every single suggested index that they see. And sometimes it’s better than adding another index that’s almost duplicate to another, is to just change the original one or drop their original one and add a new one. So there’s a lot of ways that people end up creating indexes in their databases that don’t really help and oftentimes may hinder. So that’s one of the things that we can take a look at and find those things and come up with the right indexes when people have the wrong ones in place there Shannon Lindsay 10:57 with locking and blocking. How might that impact somebody’s performance issues or create them? Yep. Steve Stedman 11:04 Well, this is one of those things that I don’t know. It’s probably one of the worst performance type things that we run into is when you’ve got excessive blocking or locking. And it’s not as bad as a deadlock, but it’s the point that you’re you might have someone doing an update or doing work on a table where it’s locked for a minute or two minutes or five minutes, even or longer than that. And while that’s happening, nobody else can query or use that table. What we commonly see in a weight type with that is the LCK, underscore, M, underscore IX, weight type, which it means that there is an exclusive lock that is being waited on. And an example is you do an update on a table and maybe do an update with a join to be able to pull some other data in, and it’s a really long running query, and let’s say that update runs for five or six minutes. Well, while that’s running, nobody else is able to and it depends on the isolation level, which we’ll talk about a little bit later. Little bit later, but when it’s running, nobody is able to really access or do any other updates or inset inserts or deletes on that table. So what we usually want to look on on that is, how do we make that run faster again? Might come back to indexing at that point, or it might come back to something else. Another thing we see is that an update inside of a transaction that hasn’t been committed yet. So you open a transaction, you do an update, and then you do something else that takes some time to do some processing, and then later you eventually commit the transaction. While you’re waiting for that transaction to finally commit, there’s blocking going on on all those tables that you did updates with along the way. So one of the things we can do there is oftentimes queue up the work, or maybe do all the heavy lifting ahead of time, and then open the transaction and do a minimal amount of work inside of the transaction, and then commit it. Another locking and blocking related thing that we run into a lot is updating more rows than you really need to. So for instance, let’s say you’re updating a row and setting a value that may already be set. So let’s say you have, I don’t know, a process date, and then a flag that’s a Boolean kind of flag that says is processed. And if you’re doing an update that says, update, your table set is processed equal one, or the process date is in the past, something like that. Well, it could be that that starts out with a small number of rows, but as a table grows over time, you end up doing that update 1000s and 1000s of rows, or millions of rows. And what I like to do in that case, instead of say, do the updates that the is processed equal one, in this example, where the process date whatever the previous WHERE clause was, and where the process date does not equal one, and then what you’re doing is you’re saying, only update the rows where the process date is not already set. And that’s one of those inefficient things you run into a SQL Server, is that it will let you update a row or a column in a row to be exactly the same thing it was before. So really there’s no change, but the amount of work that’s required through the transaction log and being able to write the data, page, disk and all that, it’s just the same as though you were actually changing it. So if there’s a way to make it so your update statements are only really updating when something needs to change. That can make a big difference. There’s a client we worked with where they had a website, and on every single web page view, there was something they were doing with an update like that. I forget what it was tracking, but we were able to just put in the where clause and say where the value doesn’t equal what we’re trying to set it to, and it eliminated almost all of their blocking by doing that update on that one where clause. So there’s a lot of things that we run into with locking and blocking, but usually it’s somebody that’s making a change or doing an update that’s either causing it to do more work than it should, or that’s taking. Longer than should, and we have to figure out, how can we make those things faster?

Shannon Lindsay 15:02 How does like, lack of database maintenance impact performance on queries?

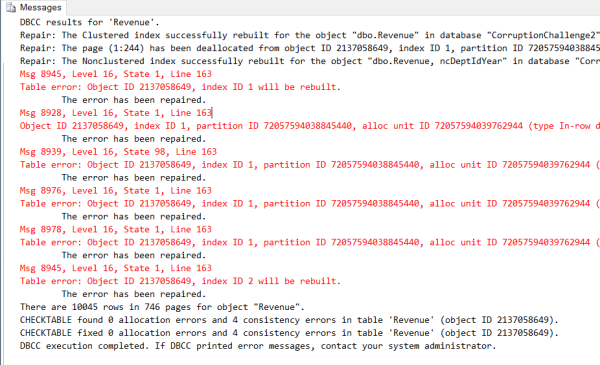

Steve Stedman 15:11 Oh, yeah, this is another good one in that a lot of people who may not be full time SQL Server experts, or maybe there are people who just were assigned the job of installing a running SQL Server. They may set up their SQL Server, and then vendor may tell them you need to install these applications, and then you just expect that SQL server to run perfectly forever. But what it turns out is that there’s a lot of things that need to be done that kind of refer to it as the proper care and feeding in your SQL Server to keep it running well over time. Some of those things that we see are like missing or out of date statistics, which can impact the speed of a query significantly, things like transaction logs growing excessively, doing maintenance at the wrong time of the day, these can all impact performance. So one of the things with statistics is that a lot of people get caught up on index defragmentation. And yes, index defragmentation is a good thing, but I think that updating statistics from what I’ve seen is far more important than defragmenting indexes, because statistics give the query optimizer clues about how much data exists in different tables, so that when a query is being run, it can say, Should I do an index seek or a scan, or do a full table scan, even depending on how many rows are there, if your statistics are out of date, and SQL server thinks that you’ve got a small table when you’ve actually got a really large table, that can really change the plan that’s being used when your queries are being run. Another thing is that scheduling maintenance, things like rebuilding indexes and statistics and doing those at a time where they may be needed, for instance, you might say, well, we’re a eight to five kind of shop, and we’re going to run all of our maintenance at 3am and if you do that, that’s great, if you know that nobody’s using a server at that time. But if you have a situation where you are rebuilding all those indexes and statistics and doing other jobs like that at 3am and that’s the same time that you’ve got a data extract, extract running, or some warehousing running, or something that’s putting a lot of load on your server, those things can be really slowed down. So it’s good to be able to capture and look at, well, what’s happening at those times. So you can see, well, maybe I am rebuilding indexes and statistics at the wrong time, or I’m running check dB at the wrong time, or I’m doing all my full backups at a time when there’s a lot of load happening. So it’s really important to understand which jobs are being run when, and also what’s, what are the kind of the peak times for IO and CPU and stuff like that in your database, so that those maintenance jobs can be run at a point in time where there’s the least impact on other systems, where Shannon Lindsay 17:57 functions In the where clause an issue. Oh, Steve Stedman 18:02 this is one of those things that it can be, I don’t know, a little bit misleading, and that if you’re saying, select from a table where your zip code column equals something, well, that’s the thing that SQL Server can index and can get there quickly. But if you are selecting from a table and saying where the length of the zip code equals five. For instance, let’s say you’re looking at us zip codes, and you’re trying to rule out the zip the five codes, or find all the five code zip codes, versus the five plus four or the nine digit ZIP codes. If you put in, let’s say you’ve got a table with 5 million rows, and you in the where clause, you say, where the length of the zip code equals five Well, in that case, you’ve got to call that length function on all 5 million rows. And even if that length function took a 10th of a second, sorry, a 10th of a millisecond to run, it has to be run 5 million times, once for every single row, and even a 10th of a millisecond being run 5 million times can take a very long time to run on on those bigger tables. Now, one of the things you could do in that case, if that was something that you had to regularly check for, is maybe set up a computed column, or perhaps have a column that with a trigger on it, that every time the zip code field changes, you have a column that updates to say how long it is. For instance, if that’s something that’s commonly being used, we’ve seen examples where, where date add is being used to calculate. Well, is this date event? Date somewhere within so many days of our current time? Well, if you’re saying, for instance, if you’re trying to take 5 million rows and do a date, add and add two days to it to determine is it within two days of today, and then comparing that to the day, that’s really inefficient, that could take many minutes to run, whereas, instead, if. Said where that date is is greater than or less than date add of two days from today. That could be a lot more efficient, because if you’re doing it on a fixed field, like today, or a specific date and time like get date, well in that case, you’re only doing it once, instead of doing it 5 million times, if your table has 5 million rows in it, be lot more efficient that way. We’ve worked with clients on this where. And if you’re one of the clients we’ve worked with on this, and you’re listening to this and you think, oh, boy, we’re talking about you. No, we’re not talking about you. Because we’ve worked on this with so many similar thing, with so many different clients that we’re always talking about the other one. So with that, when we work with clients where they’ve got a slow running query, where it really is bogging down their entire system and impacting overall performance, and we find that, yeah, after they do be that there’s some function in the where clause, and we change it around the logic a little bit, and it’s doing the same work, but we’re able to have it run like 100 or 1000 times faster just by not doing so much work, by calling that function that many times based off the rows and all in that table.

Shannon Lindsay 21:12 Another question, what are correlated sub queries, and how can they be an issue?

Steve Stedman 21:19 a correlated sub query is is it’s a sub query inside of your main query where it references something that is changing on every row, for instance. So let’s say you’re selecting from a table called orders, and then you have a sub query that says, give me the count, select, count of the number of rows in order, items for that order? Well, that has to be run n times, where n is the number of rows in the Orders table, meaning that if you have 5 million orders, and you’re just trying to get to count for each one of those, it’s got to be run 5 million times in that case. So similar to the functions where it’s it’s a multiplier, because the work is being done once for every single row. Now let’s just take that orders and order items example, where you need to know how many order items there were, if you just did a single big query that scanned the Order Items table and grouped by order ID and gave you account and then just join that to your orders table that can give you the same results without being a correlated sub query, meaning it’s one longer running query for the one time it runs, but it’s faster than running a fast a small query like five or 10 million times. So we’ll always want to watch out for those correlated sub queries. Typically, where we see them is either in the where clause or in the select columns. So like in the example of order, I of the order item count that I just talked about, it would that example was they were selecting from the order table, and then in the columns that were being returned, it said, select count from the Order Items where the order ID hold something, and again, that’s one of those that can really bog down a query when you’re getting into a big number of rows. And it might be that it works great in your development environment, or it might be that it works great on small, smaller tables or smaller data sets, but then you get that one client that has a whole lot, or that one customer that has a lot, whole lot whole lot more data in the system, and everything bogs down with it. So just, I mean, I’m not saying don’t ever use function in the where clause, or don’t ever use correlated sub queries. What I’m saying is that if you have a performance issue, take a look at those things, because those are kind of common things that jump out that could be really impacting overall performance.

Shannon Lindsay 23:40 What is cross apply, and can too much of that cause an issue too?

Steve Stedman 23:47 So really two things here, there’s cross apply, but then there’s also outer reply. And these, if you look at my join types class, this is one of those things that I include in the join types class, because cross apply and outer apply are basically a way of doing a join to a sub query, or do a join to a function. And similar to correlated sub queries, where you’re doing this once for every row in the result set, the cross apply, and the outer apply. Whatever you’re connecting to there is being run n times, where n is the number of rows in the table. And again, that’s one of those things that may work great in a small set of data, but if you’re doing that in a situation where it’s being run on hundreds of 1000s or millions or 10s of millions of rows, it can be get really expensive and really slow down the overall SQL server performance. Now, just like the others, I’m not saying don’t use those. I’m saying Just make sure when you are using those, that you’re aware that it’s being the thing that’s inside of that cross supply or outer reply is being run once for every single row that it’s effectively being joined to there.

Shannon Lindsay 24:58 Somebody calls us right now and they’re saying, I’m having issues. I’m my the performance isn’t doing what it should it I’m. It’s a disaster right now. What’s the first thing that you tell them to look for?

Steve Stedman 25:15 we’ve, we’ve had that call more times than once, many more times, and the first place I usually start is looking at blocking queries. And as we talked about earlier, the different things with blocking and blocking one of the biggest performance impacts you see, maybe related to blocking queries. So I would start by opening up database health monitor, looking at the current blocking queries and seeing, are there any queries that are currently blocking causing things to be slow right now, and more often than than not, that will end up being the culprit. Now. The next step, of course, is figuring out, well, how bad is it and how do you go about fixing it? But the first step in resolving it is at least identifying the problem and determining is it blocking and blocking. And then with that, we also have the historic monitoring and database health monitor that lets you track that blocking over time, and see, are there periods of the day that you have more blocking than other periods? Then once you’ve done that, next I would take a look at things like the weight statistics. What is your SQL Server waiting on? I’d want to look and see, is it waiting on IO or memory or CPU or network or blocking or something else. And again, that’s one of those things that database health monitor will help you find. But, and when I refer to database health monitor, anything that we’re doing a database health monitor, you can always do with just regular SQL queries as well, if you know how to go find them. But that’s one of those things we just make a little bit easier with that tool. So blocking queries is the number one thing I would check. And then, depending on the output of that and we go and look at weight statistics.

Shannon Lindsay 26:43 you’ve said the term performance customer. When you use that term, what does that mean?

Steve Stedman 26:51 Yeah, so some of the customers we work with, I mean, there’s different attributes of the customers we work with, and some of them are customers who a little bit of performance impact doesn’t really hurt them, but if they’re down for any amount of time, that hurts. And that’s an example of like more important than performance is high availability. But when we talk about performance customers, these are the customers whose system is very sensitive to any kind of a performance change. For instance, if you’re dealing with a large public website and something happens, and everything bogs down. Or if their systems are slow, that it impacts team productivity, like, let’s say you’ve got great planes or Microsoft Dynamics, and that slows down, and suddenly your finance team gets really grumpy because they’re just sitting around waiting for the application to stop spinning before they can do the next thing, and there’s nothing, nothing quite like Grumpy finance teams, because they’ll really, they’ll really let you know there’s a problem with the system. So that’s an example where performance is really important in that environment. Another is that systems that slow down production of a product. A lot of the clients we work with are factories of some kind where they’re producing some product. And you wouldn’t think that if you’re trying to figure out how much, how much volume you can get out of your factory, they should be considering SQL Server as the bottleneck. But we’ve seen several occasions where SQL Server has been the bottleneck, not really SQL Server itself, but the queries that were written in an application and that we’re actually able to speed up production for them based off of doing some performance tuning. I mean, one of them was a plywood factory, and the amount of plywood that they were able to put out every day was impacted or changed by the performance improvement that we did on the SQL Server. Now, when we work with many of these, sometimes, if they have a 10% slowdown on their system or everything, ends up being about 10% slower. That may do that may be the trigger that leads to clients or internal team or other people screaming about how bad the system is. So when we talk about performance customers, those are the ones that we work with, that we monitor and that are top priority for dealing with performance before anything else, typically. And those are the fun ones, because there’s a lot we can do with with that.

Shannon Lindsay 29:09 What can Stedman solutions offer to help with that, those kind of performance issues?

Steve Stedman 29:14 Well, there’s a lot of options there. And really, I mean, when I started Stedman solutions almost 10 years ago. I mean, the key here was that we’ll help with SQL Server. And a lot of what we help with SQL Server is performance and the options that we have available for that. One of them is our managed service offering, where we’ll do manage services with performance focus, where, for a monthly fee, we’ll help take care of your SQL servers and make sure they’re in great shape. And when those performance issues pop up, we’ll just help you take care of them, rather than having to to come up with extra budget that much to deal with it. Also with that, we’ll do what we can to make sure that we’re addressing those issues before they come up, and we’re being proactive in catching the blocking before it becomes too big of an issue. Another. Thing we do is a SQL server performance assessment, where we’ll take a two to three week project, where we’ll put a team on your system and really focus on everything performance related that we can do for that two or two or three week period, and we’ll work with you to do everything we can to get that system performing as quickly as possible. We also offer mentoring. Mentoring is one of those things where you can buy in for a four hour period, four hour block at a time, and then just let me know you need help, and we will assign a team member to work with you. And it might be something I mean mentoring is typically, we’re working side by side with the screen share and teaching and sharing what you can do to help improve the performance your system. We also have database health monitor, which is our, really, our flagship application that we use for tracking all this information. And then we also have our SQL performance classes at Stedman SQL school, and that’s one of those that will be on sale in November as part of our crazy month of November for class promotions. So I think at this point, that wraps up everything we’d had planned on the overall performance offering. Are there any questions for those who are watching it live at this point, just post them in the YouTube chat and we’re able to see that. See that here. What I’m going to do, I’m going to go on to section we have called the ask Steve any SQL topic, and this is a question that was submitted that we’re going to try and figure out how to how to fix so or how to answer. But in the meantime, feel free to post questions in the live stream, and we’ll come back and check those after I do the ask Steve questions. So the question that came in. And keep in mind, if you want to submit these questions later, and you’re not actually watching live right now, you can always email Shannon, at Shannon@stedmansolution.com with your question and ask if we would consider it for our next pod, for a future podcast, and we’d be happy to take a look at that one day. So the question that came in here is, What are SQL Server isolation levels, and how do they impact my data? Well, I want to change my screen here to display this little chart. So What are SQL Server isolation levels? Well, isolation levels are how SQL server controls transactional interaction, and keep in mind that on any SQL Server with any kind of a load, you’ve got lots of people running queries, and if you just had it so everybody could be modifying the same table at the same time, you’d end up with a lot of data corruption kind of issues. So SQL Server has these isolation levels designed to sort of impact the overall visibility or availability of data in the tables. So the first one I want to cover is what’s referred to as read uncommitted. And that’s one of those that when people put the no lock hint on query, read uncommitted is very similar to no lock but read uncommitted is doing it on a transaction level rather than a single table level. But what read and committed means is that I want to read data whether or not it’s been committed in a transaction or not, and it allows for what are called dirty reads. And dirty reads are reads where the data is currently changing and what you’re seeing there may not be the final outcome for when the query is completed, you’re seeing uncommitted changes that by uncommitted uncommitted, it might mean that they’re going to be rolled back and they may not actually exist. So you’re getting dirty data there. And with that, you can also get phantom reads. There’s a lot of options to get phantom reads. But phantom reads are basically where you query a table and say, how many of this is there, and you query again later, and it might change. Phantom reads are not nearly as bad as dirty reads, but you get a very high concurrency, and that with READ UNCOMMITTED, you get a lot of you can have a lot more people running without any kind of conflicts and but the data consistency that you run into there is very low, meaning that you may get inaccurate data. There may be some queries that you run where the same row rule shows up in the results have multiple times. That can happen if a page split is going on, or if an index is being rebuilt. So read uncommitted is one of those that if you’re in a situation where performance is more important than data accuracy, that might be an okay place to use redundant and let’s say it’s something like your favorite social media platform where you’re looking at comments. And if, if one user viewing the post sees a slightly different set of comments for a minute or two than another user sees, well, maybe redundant would be okay for that kind of situation, but if it’s something like me, I want to go look at my bank account balance, and I’m seeing data that’s not entirely accurate, that could be really confusing or really disturbing, and so redone, committed isn’t good for situations where I. Or you’re concerned about the data consistency. Okay, so the next transaction, the next isolation level, is read committed. And READ COMMITTED is the standard default on SQL Server. And what this says is that I’m going to only read data on my query that’s actually been committed. I’m not going to read data that’s changing, and I’m not going to read data, that’s that somebody has might possibly roll back another transaction. So with that, you don’t get any dirty reads with READ COMMITTED, but you still can get phantom reads, meaning this the data can change over time. With that, the concurrency, it’s not as big of a throughput as read uncommitted, but it is pretty good overall, and the consistency is pretty good overall as well. So READ COMMITTED works pretty well for most people, most of the time. Then we run into Repeatable Read and serializable, and these are two different isolation levels where they basically both ensure that once it is read that read, that it can’t be modified by another transaction. But the difference between Repeatable Read and Serializable is it Serializable make sure that the entire data range being accessed is being locked. Now, I often equate Serializable being like traffic, and if you live somewhere where there’s like 10 lanes wide on the highway like Los Angeles, and you’ve got 10 lanes there, you can get a whole lot of traffic, assuming the traffic’s moving. You get a whole lot of traffic through on that highway. But if something happens, like a presidential motorcade, or the pope shows up with the Popemobile, or something like that, where they shut down the highway for these special case uses where nobody else can use that highway at the same time, that’s kind of what I think of with serializable. Serializable is a way of saying in a database, anything that’s touched on this query completely lock it and make it so nobody can touch it, change it or read it until I’m done doing what I’m going to do here. Serializable is one of those things I’ve seen in a lot of cases where somebody accidentally turns it on, and although it does give you the absolute highest level of consistency, very rarely have I ever seen a situation where that level of consistency is going to is actually going to be a good thing compared to the performance that you lose one client, actually, I should say one, multiple clients that we worked with here had a situation where this accidentally got turned on in there was it the web dot config file and.net where it was defining your SQL server connection, they accidentally turned on serializable, which meant that everything was basically running serializable, and only it meant that one query could be running at a time on all those tables that were being touched by that query. We’re able to change that back to the default of recommitted, and that was a night and day performance improvement for the client. Next I want to talk about snapshot and READ COMMITTED snapshot isolation level. And snapshot is one of those that basically takes a snapshot of your data. It was snapshot and recommitted snapshot. They both have a pretty big impact on your Temp DB load, but READ COMMITTED snapshot isolation level or RCSi. Most of these other things are isolation levels that you turn on in a query by query basis, but READ COMMITTED snapshot isolation requires you to turn it on on a server basis, or a database by database basis, so that all queries going forward at that point can then use that READ COMMITTED snapshot isolation level, and what READ COMMITTED snapshot isolation level does? This is really cool. It basically keeps a copy of data that’s changing in Temp DB so that when you read, you’re never getting dirty reads, but you’re never being blocked by people changing the data, because you’re able to look at what that data looked like before it was being changed, or before those open transactions were were started, we worked with clients on this. In fact, I talked to one just a couple days ago, where two years ago, we turned on recon snapshot isolation level for them. And it took a system that was getting stuck or blocking almost every single day multiple times to spend on running beautifully for almost two years, and now we did have to increase some things around temp TB and do a little bit of extra checking on a couple things around READ COMMITTED snapshot isolation level, but that’s one of those that if you have a lot of locking and blocking, it’s one of those things consider taking a look at. So my recommendation here is that 99% of the time keeping your SQL server in the recommitted snapshot isolation level or the default is going to be fine, but for those outliers that 1% READ COMMITTED snapshot isolation level is generally the best option, and very rarely do we come up with scenarios where the other ones aren’t worth using. So hopefully that answers the question on what are isolation levels in SQL Server? And let me check, do we, Shannon, do you see any questions being submitted

Shannon Lindsay 39:47 I have not seen anything come through.

Steve Stedman 39:51 Okay, that’s fine. Well, just a reminder, if you want to ask a question that we’re going to feature on the podcast, just email Shannon@Stedmansolutions.com and we’ll get that in here. You to be answered. Thanks for watching our next episode podcast. We’re actually going to not be doing next week, because I’m going to be out. But two weeks after that, we’ll have the new database health monitor features, and then week after that, we’re bringing in one of our partners talk about the services provided by her company, where you can watch and listen. You can look at you can find it on YouTube, or you can go to Stedman solutions.com/podcast, and get a list of all the previous episode. I think that wraps it up at this point. Thanks, everyone. Speaker 1 40:42 You thanks Steve Stedman 40:50 for watching our video. I’m Steve, and I hope you’ve enjoyed this. Please click the thumbs up if you liked it, and if you want more information, more videos like this, click the subscribe button and hit the bell icon so that you can get notified of future videos that we create.

Visit our Podcast page for more episodes.

Contact Info

Stedman Solutions, LLC.PO Box 3175

Ferndale WA 98248

Phone: (360)610-7833